Point Cloud Detection and Semantic Understanding in Robotics

Point cloud detection refers to the process of capturing 3D spatial data with sensors such as LiDAR, producing a "point cloud" in which each point is a measurement of a location on an object’s surface. Traditional point cloud detection lets robots perceive obstacles, walls, and objects, which supports navigation and obstacle avoidance. However, point cloud data mainly describes the geometry of a space. On its own, it says nothing about what the objects in that space are.
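
To make "each point represents a measurement" concrete, the sketch below shows how range returns from a spinning LiDAR can be turned into 3D points. It assumes each return is reported as a range plus an azimuth and elevation angle in radians in the sensor frame, and that missing returns appear as zero, infinite, or NaN ranges. The function name `ranges_to_point_cloud` is hypothetical, and real drivers differ in their angle conventions and output formats.

```python
import numpy as np


def ranges_to_point_cloud(ranges, azimuths, elevations):
    """Convert LiDAR returns (range, azimuth, elevation) into an (N, 3) x, y, z array.

    Assumed convention: angles in radians, azimuth measured counter-clockwise
    from the x-axis in the x-y plane, elevation measured up from that plane.
    """
    r = np.asarray(ranges, dtype=float)
    az = np.asarray(azimuths, dtype=float)
    el = np.asarray(elevations, dtype=float)
    # Treat zero, infinite, or NaN ranges as missing returns and drop them.
    valid = np.isfinite(r) & (r > 0)
    r, az, el = r[valid], az[valid], el[valid]
    horizontal = r * np.cos(el)  # length of the range projected onto the x-y plane
    x = horizontal * np.cos(az)
    y = horizontal * np.sin(az)
    z = r * np.sin(el)
    return np.column_stack((x, y, z))


cloud = ranges_to_point_cloud([2.0, 0.0, 5.0], [0.0, 0.5, np.pi / 2], [0.0, 0.0, 0.0])
print(cloud.shape)  # (2, 3): the zero-range return is discarded
```

The result is pure geometry: a list of coordinates with no indication of which surface or object each point came from.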

Semantic understanding is a more advanced capability: it lets robots perceive not only the shape and location of objects but also their function and significance. For instance, a robot can recognize that an object is a "table" rather than just a 3D shape, or distinguish a "door" from a "wall" and understand that a door can be opened while a wall cannot. With semantic understanding, robots can make better-informed decisions and plan their behavior more effectively in complex environments.
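
The door-versus-wall example can be illustrated with a small lookup from semantic class to affordance. Geometrically, a closed door and a wall are both vertical obstacles, so a geometry-only planner would avoid both. Once the object carries a class label, the planner can react differently. The class names, the `AFFORDANCES` table, and the action strings below are hypothetical and serve only to illustrate the idea.

```python
# Hypothetical affordance table: class names and properties are illustrative,
# not taken from any particular robot, dataset, or planner.
AFFORDANCES = {
    "wall": {"traversable": False, "openable": False},
    "door": {"traversable": False, "openable": True},
    "table": {"traversable": False, "openable": False},
    "floor": {"traversable": True, "openable": False},
}


def choose_action(label: str) -> str:
    """Pick a high-level action for an object the robot has classified."""
    props = AFFORDANCES.get(label)
    if props is None:
        # Unknown class: fall back to purely geometric behaviour.
        return "avoid"
    if props["traversable"]:
        return "drive_over"
    if props["openable"]:
        return "open_and_pass"
    return "avoid"


for label in ("wall", "door", "floor", "sofa"):
    print(label, "->", choose_action(label))
```

Without the label, every case would fall into the "avoid" branch, which is exactly the limitation of purely geometric perception.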

Although point cloud detection has given mobile robots a basic awareness of their environment, it still faces several challenges:

- Lack of object recognition: Raw point cloud data offers only geometric information, so it does not by itself tell a robot the type or purpose of an object.

- Poor adaptability to environmental changes: Point cloud data can become noisy or inaccurate under varying lighting (particularly for camera-based depth sensors), in adverse weather such as rain, fog, or snow, and on reflective or transparent surfaces, which reduces perception quality.

- Large data volume and complex processing: A single scan can contain anywhere from thousands to hundreds of thousands of points, which must be post-processed, for example by filtering and segmentation, before meaningful information can be extracted. This processing is complex and computationally demanding.
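
The filtering and segmentation steps mentioned in the last point can be sketched with NumPy alone. The example below shows two common choices, assumed here for illustration rather than taken from any specific system: voxel-grid downsampling, which replaces all points in each small cube by their centroid to cut the data volume, and RANSAC plane fitting, which separates the dominant plane (usually the ground) from obstacles. Function names, the voxel size, and the distance threshold are hypothetical. In practice, libraries such as PCL or Open3D provide optimized versions of both operations.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    points = np.asarray(points, dtype=float)
    keys = np.floor(points / voxel_size).astype(np.int64)  # integer voxel index per point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # keep a flat index array across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]


def segment_plane_ransac(points, distance_threshold=0.05, iterations=200, seed=0):
    """Return a boolean mask of inliers of the dominant plane (often the ground)."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        # Fit a candidate plane through three randomly chosen points.
        p0, p1, p2 = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # the three points are (nearly) collinear: no unique plane
            continue
        normal /= norm
        # Inliers are points whose perpendicular distance to the plane is small.
        mask = np.abs((points - p0) @ normal) < distance_threshold
        if mask.sum() > best_mask.sum():
            best_mask = mask
    return best_mask


# Synthetic scene: a flat 20 x 20 ground patch plus a box-shaped obstacle above it.
gx, gy = np.meshgrid(np.linspace(0, 2, 20), np.linspace(0, 2, 20))
ground = np.column_stack((gx.ravel(), gy.ravel(), np.zeros(gx.size)))
box = np.random.default_rng(1).uniform([0.8, 0.8, 0.5], [1.2, 1.2, 1.0], size=(100, 3))
cloud = np.vstack((ground, box))

ground_mask = segment_plane_ransac(cloud)
obstacles = cloud[~ground_mask]
print(len(cloud), "points ->", len(voxel_downsample(cloud, 0.2)), "after downsampling")
print(ground_mask.sum(), "ground points,", len(obstacles), "obstacle points")
```

Even after these steps, the obstacle points are still just unlabeled geometry: the pipeline can tell the robot that something is there, not what it is.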

To overcome these limitations, we turn to semantic understanding, which lets robots not only perceive their environment but also respond intelligently to the objects, activities, and scenes in it. By combining point cloud data with deep learning and computer vision, robots can interpret the meaning of the sensor data they acquire.
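
One common way to combine point clouds with computer vision is to project each LiDAR point into a camera image that a 2D segmentation network has already labeled, and copy the pixel's class to the point. The sketch below assumes the points have already been transformed into the camera frame (extrinsic calibration applied), a pinhole intrinsic matrix `K`, and an integer `label_map` from some hypothetical segmentation model. It is one possible fusion scheme, not necessarily the one used by any system mentioned here, and it ignores occlusion: because the LiDAR and camera see the scene from slightly different positions, a point hidden from the camera can pick up the label of whatever lies in front of it.

```python
import numpy as np


def label_points_from_image(points_cam, K, label_map, unknown=-1):
    """Give each 3D point the class ID of the image pixel it projects onto.

    points_cam: (N, 3) points already in the camera frame (x right, y down, z forward).
    K:          (3, 3) pinhole camera intrinsic matrix.
    label_map:  (H, W) integer class IDs, e.g. from a 2D semantic segmentation network.
    """
    points_cam = np.asarray(points_cam, dtype=float)
    K = np.asarray(K, dtype=float)
    labels = np.full(len(points_cam), unknown, dtype=int)
    in_front = points_cam[:, 2] > 0  # points behind the camera cannot be seen
    proj = points_cam[in_front] @ K.T  # homogeneous pixel coordinates
    u = np.floor(proj[:, 0] / proj[:, 2]).astype(int)  # pixel column
    v = np.floor(proj[:, 1] / proj[:, 2]).astype(int)  # pixel row
    h, w = label_map.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(in_front)[inside]  # indices back into points_cam
    labels[idx] = label_map[v[inside], u[inside]]
    return labels


# Toy example: a 100 x 80 image whose top half is class 1 and bottom half class 2.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]])
label_map = np.ones((80, 100), dtype=int)
label_map[40:, :] = 2
points = np.array([
    [0.0, 0.0, 1.0],    # projects to pixel (u=50, v=40): class 2
    [0.0, -0.25, 1.0],  # projects to pixel (u=50, v=15): class 1
    [0.0, 0.0, -1.0],   # behind the camera: unknown
    [10.0, 0.0, 1.0],   # projects outside the image: unknown
])
print(label_points_from_image(points, K, label_map))
```

Learned 3D networks that classify points directly are an alternative when no camera is available; the projection approach is shown here only because it is compact.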

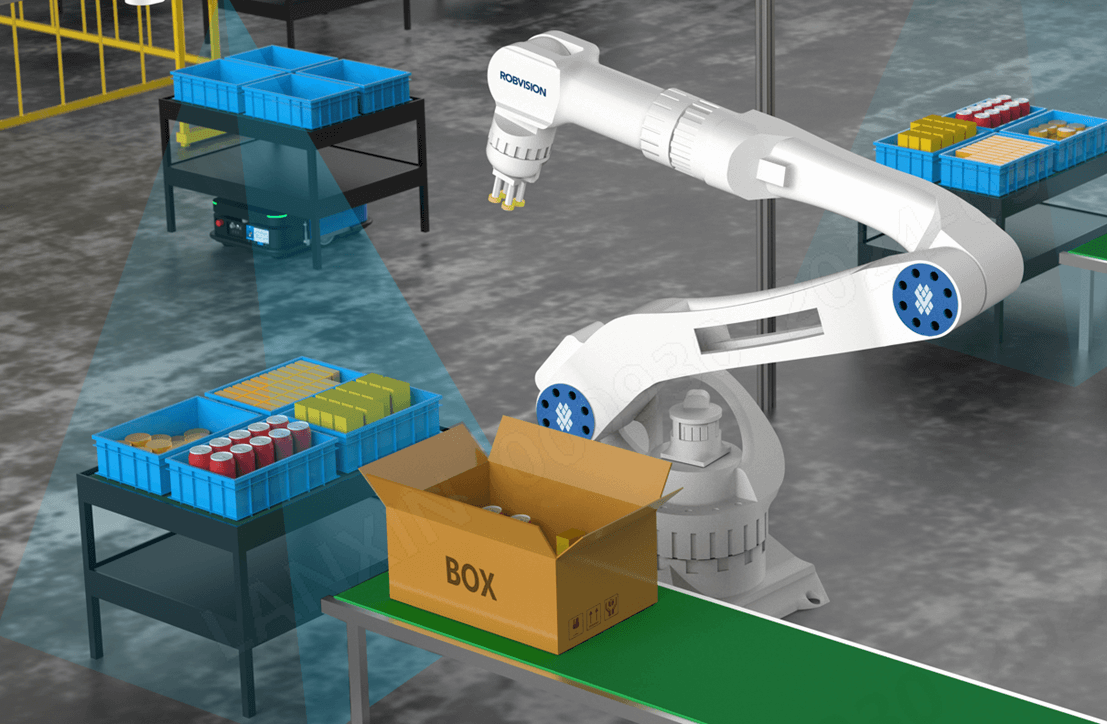

Applications of Semantic Understanding in Robotics

Several mobile robots have already implemented semantic understanding, showcasing its potential:

Autonomous vehicles such as Waymo's use LiDAR, cameras, and deep learning to perceive and semantically interpret their surroundings, recognizing pedestrians, vehicles, and traffic signs in order to make appropriate driving decisions. This lets the cars drive in urban environments, where they must understand the traffic situation and respond to it.

Intelligent warehouse robots such as Ocado’s combine visual SLAM with deep learning for environmental perception and object recognition. These robots recognize the items on shelves and pick products according to customer orders. This has increased the level of automation in smart warehouses and reduced the need for human intervention.

Service robots such as Pepper use computer vision and speech recognition for semantic understanding, which enables them to interact with people, recognize facial expressions, and respond emotionally. Pepper is widely used in customer service, retail, and exhibition settings.

Through semantic understanding, robots are becoming more capable of interacting intelligently with their environment, adapting to dynamic and complex situations, and providing enhanced user experiences across various industries.